1. Hardware Acceleration: The Foundation of Embedded AI

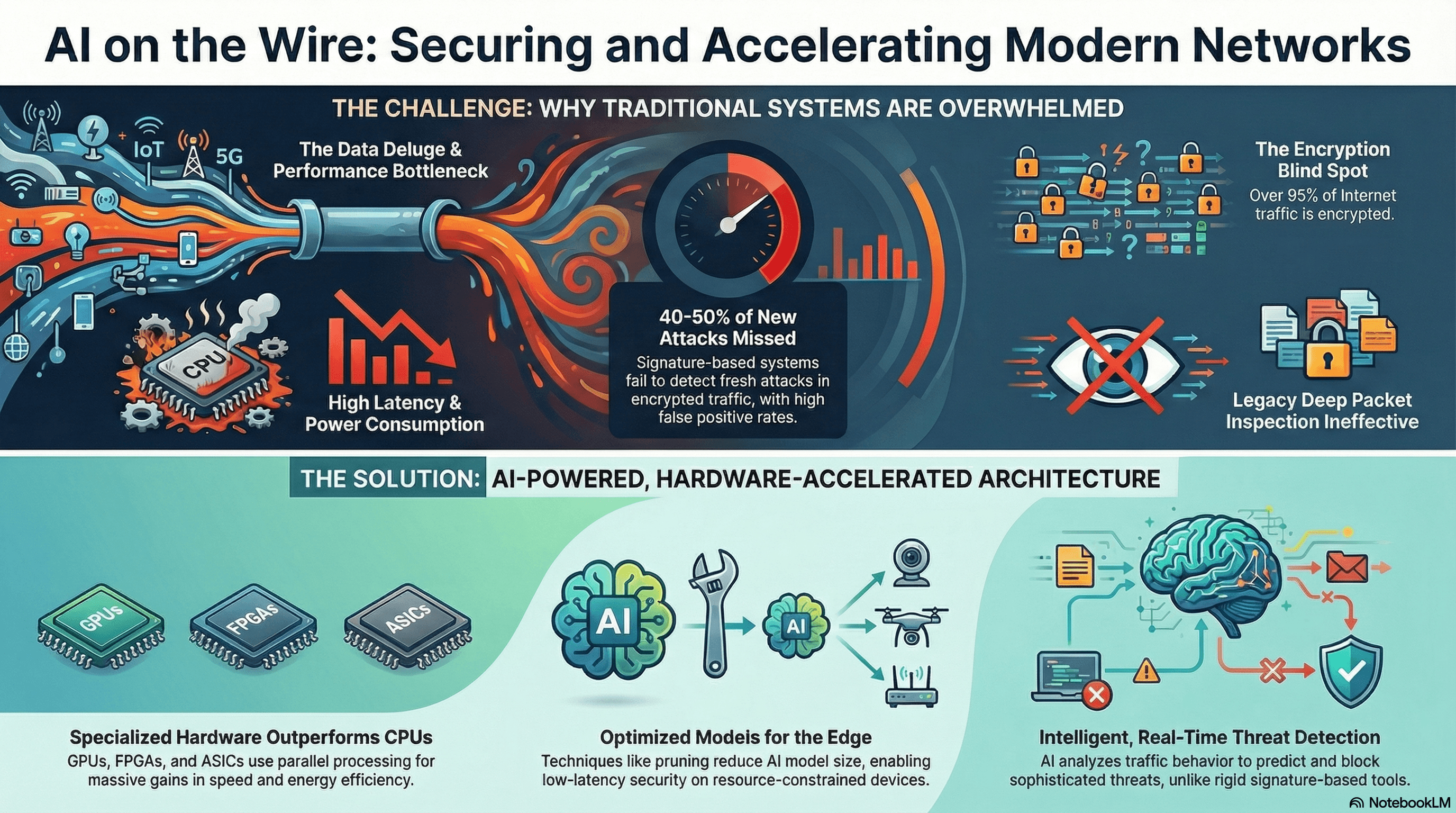

One of the primary approaches to embedding AI involves specialized hardware accelerators designed to handle AI workloads efficiently. Traditional CPUs struggle with the parallel processing demands of neural networks, leading to high latency and power consumption. Enter Neural Processing Units (NPUs), Tensor Processing Units (TPUs), and AI-optimized GPUs from leaders like NVIDIA, Intel, Qualcomm, and Apple.

- NPUs and TPUs: These chips, such as Qualcomm’s AI Engine or Google’s Edge TPU, are tailored for edge devices, enabling low-power inference. For network security, they can process vast amounts of packet data on-device, identifying anomalies without cloud dependency.

- System-on-Chip (SoC) Integration: Modern SoCs combine AI accelerators with standard processors, as seen in Arm-based designs, allowing seamless embedding in firewalls or routers. This reduces energy use, which is critical for always-on security hardware, and supports real-time threat detection.

In 2025, providers like Hailo and Intel’s Habana are pushing boundaries, with edge chips such as the Hailo-8 rated at up to 26 TOPS (tera operations per second) at under 3 W, ideal for compact network appliances.
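To put the 26 TOPS at under 3 W figure in perspective, here is a minimal back-of-the-envelope sketch. The model size (50 million operations per inference) and the 30% sustained utilization are assumptions chosen for illustration, not vendor data, and the result is a theoretical ceiling rather than a benchmark.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Return accelerator efficiency in TOPS per watt."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return tops / watts


def max_inferences_per_second(tops: float, ops_per_inference: float,
                              utilization: float = 1.0) -> float:
    """Upper bound on inferences per second for a model of the given size."""
    return tops * 1e12 * utilization / ops_per_inference


# Figures quoted in the text: 26 TOPS at (just under) 3 W.
efficiency = tops_per_watt(26, 3)

# Hypothetical model: 50 million operations per inference, 30% utilization.
rate = max_inferences_per_second(26, 50e6, utilization=0.3)

print(f"{efficiency:.2f} TOPS/W, at most about {rate:,.0f} inferences/s")
```

Real throughput depends on memory bandwidth, supported operators, and batching, so a number like this is best used only to compare candidate chips at a first pass.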

2. Model Optimization and Compression Techniques

Embedding AI isn’t just about hardware; it’s about making AI models lightweight enough for resource-constrained environments. Techniques like quantization, pruning, and knowledge distillation shrink models while keeping the loss in accuracy small.

- Quantization: Converts floating-point weights to lower-precision integers (for example, 32-bit floats to 8-bit integers), reducing model size by up to 75%. Tools like TensorFlow Lite and ONNX Runtime facilitate this for edge deployment.

- TinyML and Edge Impulse: Frameworks like Edge Impulse optimize models for microcontrollers, enabling AI on devices with mere kilobytes of RAM. For a smart firewall, this means embedding models that analyze network traffic for malware signatures instantly.
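The quantization idea can be sketched in a few lines of plain Python. This is a simplified symmetric, per-tensor int8 scheme written for illustration; it is not how TensorFlow Lite or ONNX Runtime implement it internally, and the weight values are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: floats -> int8 values plus one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the integers back to approximate float values."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.05, 0.90, -0.33]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

# Storage: 4 bytes per float32 weight versus 1 byte per int8 weight.
float32_bytes = 4 * len(weights)
int8_bytes = 1 * len(weights)
saving = 1 - int8_bytes / float32_bytes

print(q, f"scale={scale:.4f}", f"max_error={max_error:.4f}", f"saving={saving:.0%}")
```

Going from 4 bytes to 1 byte per weight is where the 75% figure comes from; the rounding error per weight stays within half a quantization step.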

These methods address the historical challenges of high energy consumption and compatibility issues in embedded systems, as highlighted in recent reviews.
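Pruning, the second technique named in this section, can be illustrated in a similar way. The sketch below shows unstructured magnitude pruning, one common variant, on made-up weights; production toolchains typically prune gradually during training and fine-tune afterwards, which is not shown here.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest absolute values."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


weights = [0.42, -1.27, 0.05, 0.90, -0.33, 0.01, -0.08, 0.61]  # made-up values
pruned = magnitude_prune(weights, sparsity=0.5)
kept = sum(1 for w in pruned if w != 0.0)

print(pruned, f"{kept} of {len(weights)} weights kept")
```

The zeros only save memory or compute when the storage format or the accelerator can exploit sparsity, which is worth checking for the target chip.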

3. Edge Computing Architectures for Seamless Integration

Edge AI shifts processing from the cloud to the device, minimizing latency, which is a must for network-level security where milliseconds matter. Platforms like Cisco Unified Edge and NVIDIA’s EGX integrate AI hardware with networking stacks.

- Hybrid Edge-Cloud Models: While fully embedded AI handles immediate threats, hybrid setups allow periodic cloud updates for model retraining, ensuring adaptability to new attack vectors.

- Security Enhancements: By processing data locally, edge AI bolsters privacy, reduces the amount of data exposed to network breaches, and helps meet data sovereignty regulations.
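The hybrid edge-cloud pattern described above can be sketched as a detector that always decides on-device and only occasionally asks the cloud for a newer model. Everything here is hypothetical: `Model`, `EdgeDetector`, and `fake_cloud` are illustrative names, the threshold rule stands in for a real neural model, and a real deployment would also verify the signature of any downloaded model.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Model:
    version: int
    threshold: float  # packets/s above which traffic is flagged (toy rule)

    def is_threat(self, packets_per_second: float) -> bool:
        return packets_per_second > self.threshold


class EdgeDetector:
    """Runs inference locally; checks the cloud for a newer model periodically."""

    def __init__(self, model: Model,
                 fetch_update: Callable[[int], Optional[Model]],
                 update_interval_s: float):
        self.model = model
        self.fetch_update = fetch_update  # hypothetical cloud call
        self.update_interval_s = update_interval_s
        self.last_check = 0.0

    def handle(self, now: float, packets_per_second: float) -> bool:
        # The decision is always made on-device with the current model.
        verdict = self.model.is_threat(packets_per_second)
        # The cloud is contacted only once the update interval has elapsed.
        if now - self.last_check >= self.update_interval_s:
            self.last_check = now
            try:
                newer = self.fetch_update(self.model.version)
            except OSError:
                newer = None  # offline: keep serving the current model
            if newer is not None and newer.version > self.model.version:
                self.model = newer
        return verdict


def fake_cloud(current_version: int) -> Optional[Model]:
    """Stand-in for an update service: offers version 2 with a stricter rule."""
    return Model(version=2, threshold=500.0) if current_version < 2 else None


detector = EdgeDetector(Model(version=1, threshold=1000.0), fake_cloud,
                        update_interval_s=3600)
first = detector.handle(now=10.0, packets_per_second=800.0)     # v1 decides
second = detector.handle(now=3600.0, packets_per_second=800.0)  # v1 decides, then updates
third = detector.handle(now=3610.0, packets_per_second=800.0)   # v2 decides
print(first, second, third, detector.model.version)
```

Keeping the verdict ahead of the update check means a slow or unreachable cloud never delays a blocking decision, which is the point of the hybrid split.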

In industrial settings, such as manufacturing or aquaculture, embedded AI in edge devices is already transforming operations by enabling predictive maintenance and real-time monitoring. These principles are directly applicable to AI firewalls.

Case Study: AI-Embedded Firewalls in Action

Imagine a network firewall that uses embedded AI to predict and block DDoS attacks before they escalate. Companies like Advantech and Qualcomm are deploying such solutions, where AI chips rated at up to 15 TOPS analyze traffic patterns on-device, bringing robotics-like precision to security.
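As a rough illustration of on-device traffic analysis, the sketch below flags sudden spikes against a smoothed baseline. It is a simple statistical stand-in (an exponentially weighted moving average) rather than a neural model, and the class name, parameters, and packet rates are all assumptions made for the example.

```python
class TrafficAnomalyDetector:
    """Flags a traffic sample that exceeds a multiple of the smoothed baseline."""

    def __init__(self, alpha: float = 0.2, ratio: float = 3.0, warmup: int = 5):
        self.alpha = alpha    # EWMA smoothing factor
        self.ratio = ratio    # multiple of the baseline that counts as a spike
        self.warmup = warmup  # samples to observe before flagging anything
        self.baseline = None
        self.seen = 0

    def observe(self, packets_per_second: float) -> bool:
        self.seen += 1
        if self.baseline is None:
            self.baseline = packets_per_second
            return False
        is_spike = (self.seen > self.warmup
                    and packets_per_second > self.ratio * self.baseline)
        if not is_spike:
            # Only normal traffic updates the baseline, so an ongoing
            # attack cannot gradually raise it.
            self.baseline += self.alpha * (packets_per_second - self.baseline)
        return is_spike


samples = [100, 110, 95, 105, 100, 102, 98, 900, 950, 101]  # made-up packets/s
detector = TrafficAnomalyDetector()
flags = [detector.observe(s) for s in samples]
print(flags)
```

A learned model would replace the fixed ratio with features such as source diversity or protocol mix, but the control flow (observe, score, act, update) stays the same.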

**At AS EXIM, we’re exploring these for our clients, combining hardware from top providers with custom firmware.**

The Road Ahead

As we move into 2026, expect further integration of 5G/6G connectivity with embedded AI, enabling ultra-low-latency security in IoT ecosystems. Challenges remain, like balancing power efficiency with performance, but the benefits (faster response times, cost savings, and enhanced security) are undeniable.

At AS EXIM, we’re committed to turning these approaches into tangible solutions. If you’re interested in embedding AI into your hardware, reach out - let’s innovate together!

To see the tutorial and more infographics: https://www.linkedin.com/pulse/modern-approaches-embedding-ai-hardware-game-changer-network-ylxcf/?trackingId=xtb0F6toe04dKi1mSGAzbw%3D%3D